High-performance, low-power, low-latency processing is needed to run the image processing algorithms of next-generation augmented reality digital vision systems. Existing helmet systems and tactical operator head-mounted displays (HMDs) are under continuing pressure to improve resolution and performance. They are also under pressure to go digital in order to reap the benefits of information and multi-source fusion. However, digital imaging lags far behind analog technology in fundamental operability: at high resolutions, the imaging latency of existing digital technology is simply not acceptable, and the user community rightfully resists moving to digital imaging until the latency problem is solved. Perceptive Innovations is performing research that is demonstrating how to change that situation, enabling high-performance digital imaging and future fusion applications in a scalable product with a small size, weight, and power (SWaP) footprint built on commercial off-the-shelf (COTS) technology. Our objective is to develop architectures and technology for an embedded processor that can run the image processing algorithms required by a digital head-mounted display for dismounted operators, at latency low enough for the display to be usable without inducing operator fatigue and nausea.

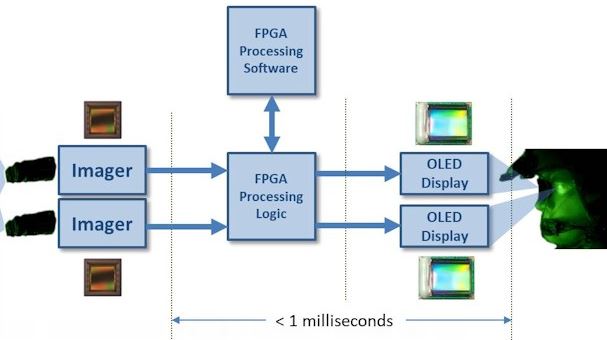

Perceptive Innovations has developed a technique for performing typical image processing functions, such as Bayer-to-RGB demosaicing, noise filtering, warp correction of lens distortion, and offset parallax correction, with nearly the theoretical minimum processing latency. The technique scales to high resolutions, high frame rates, and multiple video streams while adding only microseconds of latency. To implement it, we are leveraging the latest FPGA SoC devices, whose power levels make it possible to build multispectral augmented reality night vision goggles that run on battery power. Flash Pixel Processing was devised on the USAF LLEVS STTR Program, and we are currently building prototypes that leverage it on the USAF BAARS Program.
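To see why "microseconds of added latency" is plausible, it helps to compare a stage that buffers a whole frame with a generic streaming (line-buffered) stage. The sketch below is only back-of-envelope arithmetic under stated assumptions, not a description of how Flash Pixel Processing works: it assumes pixels arrive in raster order at a constant rate, ignores blanking intervals, and assumes a stage using a k x k neighborhood (as a 3x3 demosaic or noise filter might) must wait roughly half a window of rows and columns before emitting each output pixel. The 1920x1080 at 60 fps figures are hypothetical example values.

```python
"""Back-of-envelope latency: frame-buffered vs. line-buffered (streaming) stages.

Hypothetical assumptions for illustration only: raster-order pixel arrival at a
constant rate, no blanking intervals, and a k x k window that must wait for
k // 2 extra rows plus k // 2 extra pixels before producing an output pixel.
"""


def line_time_s(height_rows: int, fps: float) -> float:
    """Time to receive one image row, ignoring blanking."""
    return 1.0 / (fps * height_rows)


def frame_buffered_latency_s(fps: float) -> float:
    """A stage that waits for the complete frame adds one full frame period."""
    return 1.0 / fps


def line_buffered_latency_s(width_px: int, height_rows: int, fps: float, kernel: int) -> float:
    """A streaming k x k stage adds about half a window of rows and pixels."""
    half = kernel // 2
    t_row = line_time_s(height_rows, fps)
    t_px = t_row / width_px
    return half * t_row + half * t_px


if __name__ == "__main__":
    w, h, fps = 1920, 1080, 60.0  # hypothetical example format
    print(f"frame-buffered: {frame_buffered_latency_s(fps) * 1e3:.2f} ms")
    for k in (3, 5):
        lat_us = line_buffered_latency_s(w, h, fps, k) * 1e6
        print(f"{k}x{k} line-buffered: {lat_us:.2f} us")
```

Under these assumptions, a frame-buffered stage adds about 16.7 ms at 60 fps, while a 3x3 streaming stage adds roughly one line time (about 15 µs). Geometric operations such as lens-distortion warp correction typically need more buffered rows, set by the largest vertical displacement in the correction map, so their added latency grows accordingly.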

It is now possible to conceive of a common mission payload that performs both RF and optical synthetic aperture imaging. Such a system would deliver the best of both worlds, combining the strengths and mitigating the weaknesses of each sensing modality. However, such systems are currently completely independent "stovepipes," and the combined size, weight, and power (SWaP) of the two systems loosely integrated onto one platform would likely prohibit their use on smaller platforms and unmanned aircraft systems (UAS). To address this, we are working with our partners to explore the tradespace for combining these two sensing modalities into a common sensor, in particular an overlapping set of algorithms running on a common signal processor. We are confident that such a system will offer both SWaP and performance benefits. Our objective is to develop a common, real-time processing concept and to demonstrate that the approach is feasible and significantly improves common processing for high-resolution imaging.